A (the?) primary essence of agile is to focus on delivering value-adding functionality as often as possible. Value-adding, that is, from the point of view of your consumer.

[Quick aside: we most often think of this in terms of value-adding 'application software', but certainly not by rule - it is whatever it is that is of value to your consumer. If you don't have a consumer, or if you aren't bringing value, then why are you spending time on it? Anyway...]

The implication of this is that many tasks we perform, while in most instances are in fact (hopefully) necessary, do not in and of themselves provide any real 'customer' value. In software terms, for example, this means that while "analysis" or "coding" or "testing" activities might in fact be necessary, they are not what our consumer/customer seeks…it is "a working feature" only that truly provides the value.

Let's bring into the discussion that holy agile grail we call a "story" (a symbol/moniker for a value-adding feature) - it is assumed that all the tasks that have to occur to deliver a story will occur, but alas, we simply should not be giving or getting any cookies for completing these tasks. We get cookies only for delivering [running, tested] features; we get cookies for delivering value.

More to the point of where I'm going with this, its not really to any benefit then to explicitly track these tasks (ie, track, meaning in your reports, "backlog" if that's a tool of yours, or things similar). Why? Well, primarily, simply because it is the story (feature) we strive to deliver, not necessarily analysis or code, etc.

(Taking a bit of a tangent here, but its an important one...) "Deliver value as often as possible". The further implication here is that we also strive to break the feature up into smaller and smaller pieces (stories); the smaller, the quicker it can be delivered, eh? And, further, the smaller the more likely to have an accurate estimate; the easier to design in an evolutionary way; the easier to test; etc. But in the end, with respect to our "stories", no matter how small we split, we do not split by tasks - each split in and of itself must still provide some sort of demonstrable value. Anyhoo...

"So Mike," you say, "where in the world are you going with all this?!?!". Great question, here it is: (MBark of this post) Do away with your "burndown charts".

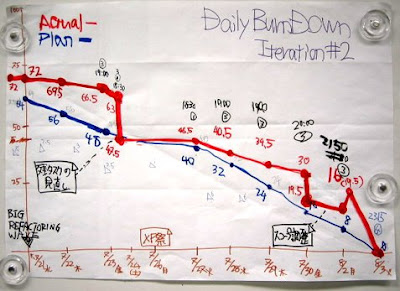

The sprint "burndown" [pictured left], at least as prescribed by Scrum, is tracking tasks. Being we like not to focus on tasks, and being we only get cookies for completed stories, doesn't a burndown focus us on the wrong thing? Yes, it does. Bad burndown, bad. In addition to focusing us on the wrong things, it burdens us to worry about too many things; to, in many ways, encourage us to micro-manage (which, of course, is not good for anybody and very un-agile). If you need more, how about the fact that it can lead quite readily to false-negatives with respect to how "done" you really are: "great, Bob, you've got task x, y, and z's hours all used up, sweet!"...but, uh, what the heck does that really mean with respect to the status of obtaining your real goal (delivering the feature)?

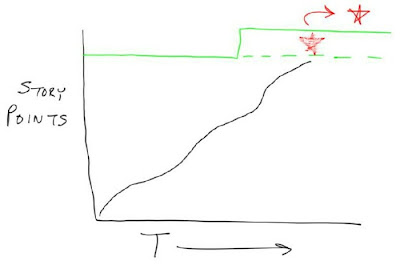

Thus, a "burnup" [pictured right]. A burnup tracks only features (whatever that might mean in your context); the features represented by stories, each assigned a point value to indicate size. When a story is complete (a feature is "done"), the burn goes "up" by that feature's points. No hours, no tasks, no minutiae about various random "inputs" - rather, just a simple representation of what we really care about - features being output. Easy-peasy, and way more in line with our goals. And, of course, as far as contributing as a tool to help us determine our velocity: if we really need to measure anything, what we really want to be measuring is our rate of delivering working software, not our rate of "doing analysis", right?

Be not mistaken by my message, it's not to say you and your team won't have daily knowledge of what particular tasks might be going on, I sure as hooha hope you do! It's just that we don't go so far as to officially track or measure them. That's what your daily stand-up is for. And, of course, common sense is required, if you really want them to be visible, fantastic (I mean it) - go ahead and hang up some task stickies on your Storyboard or some other "information radiator" hanging in your team room.

But, alas, my spidey-sense says: unless you're hoping to burn down your project, steer clear of the Burndown chart.